Defining the LLMS.txt File’s Role in SEO

The LLMS.txt file can play an important role in managing how a site's data is shared and accessed within an SEO framework. It best fits businesses that need structured data management, particularly those with large datasets that must be organized efficiently for search engine algorithms.

One significant limitation of the LLMS.txt file is its complexity. Teams without technical expertise may configure it incorrectly, so that outdated or inaccurate information reaches search engines, with potential traffic loss or indexing issues as a result. Without a proper understanding of the file, teams may also overestimate how much it improves SEO performance.

Practical Application of LLMS.txt Files

For instance, a medium-sized e-commerce business may use an LLMS.txt file to streamline product data for search engines. By exporting its inventory into this format, it can make sure that crawlers receive up-to-date information about available products and their attributes. This structured approach may improve visibility and click-through rates.
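As a minimal sketch, the export step could look like the Python below. It assumes the layout proposed at llmstxt.org (an H1 title, a blockquote summary, then H2 sections containing Markdown link lists); the `Product` record, its fields, and `build_llms_txt` are hypothetical names for illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Product:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    url: str
    summary: str
    in_stock: bool


def build_llms_txt(site_name: str, site_summary: str, products: list[Product]) -> str:
    """Render an llms.txt-style Markdown document from an inventory list.

    Layout assumed from the llmstxt.org proposal: H1 title, blockquote
    summary, then an H2 section holding a Markdown list of links.
    """
    lines = [f"# {site_name}", "", f"> {site_summary}", "", "## Products", ""]
    # List only products that are currently available, sorted by name,
    # so the file does not advertise items the store can no longer sell.
    for p in sorted(products, key=lambda p: p.name):
        if p.in_stock:
            lines.append(f"- [{p.name}]({p.url}): {p.summary}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    inventory = [
        Product("Trail Shoe", "https://shop.example.com/trail-shoe",
                "Lightweight trail running shoe", True),
        Product("Rain Jacket", "https://shop.example.com/rain-jacket",
                "Packable waterproof jacket", False),
    ]
    print(build_llms_txt("Example Shop", "Outdoor gear for hikers and runners.", inventory))
```

Filtering out-of-stock items at build time is one way to keep the file from pointing crawlers at pages that no longer serve customers; regenerating the file on every inventory export keeps it current without manual edits.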

Many teams misuse the LLMS.txt file by assuming it automatically optimizes their SEO efforts without ongoing management. They often neglect to regularly update or analyze the data contained within these files, leading to outdated or inaccurate information being presented to search engines.

Misunderstanding the role of LLMS.txt files can lead to significant setbacks in traffic and visibility.

Misunderstanding the Purpose of LLMS.txt Files

The LLMS.txt file is often mischaracterized as a universal solution for data management in SEO. It is best suited for organizations that handle complex datasets requiring structured organization for optimal search engine interaction. This makes it particularly valuable for medium to large businesses that rely on accurate and timely data representation.

A critical limitation is the misconception that simply implementing an LLMS.txt file will automatically enhance SEO performance. The reality is that without continuous oversight and updates, these files can become outdated, leading to potential miscommunication with search engines and ultimately a decrease in traffic.

Practical Workflow Example

Consider a digital marketing agency that manages multiple client websites. It may use an LLMS.txt file to consolidate data streams from client sites into a single format. This lets the agency parse and analyze performance metrics across platforms efficiently and keep each client's data current and actionable.
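A hedged sketch of the consolidation step: parse each client's llms.txt-style file into rows of (client, section, title, URL) so they can be analyzed together. The regular expression assumes link lines of the form `- [Title](url)` with optional notes, as in the llmstxt.org proposal; `parse_llms_txt`, `consolidate`, and `Entry` are hypothetical names.

```python
import re
from typing import NamedTuple

# Matches list items such as "- [Title](https://...)" or
# "- [Title](https://...): optional notes" (assumed llmstxt.org-style links).
LINK_RE = re.compile(r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<notes>.*))?$")


class Entry(NamedTuple):
    """One consolidated row (hypothetical schema)."""
    client: str
    section: str
    title: str
    url: str


def parse_llms_txt(client: str, text: str) -> list[Entry]:
    """Extract (section, title, url) rows from one llms.txt-style file."""
    entries: list[Entry] = []
    section = ""  # links before the first H2 get an empty section name
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            section = line[3:].strip()
        elif (match := LINK_RE.match(line)):
            entries.append(Entry(client, section, match["title"], match["url"]))
    return entries


def consolidate(files: dict[str, str]) -> list[Entry]:
    """Merge the parsed entries of several clients into one list, ordered by client."""
    merged: list[Entry] = []
    for client, text in sorted(files.items()):
        merged.extend(parse_llms_txt(client, text))
    return merged
```

Keeping one flat row per link makes it straightforward to load the result into a spreadsheet or dataframe and join it with each client's analytics data.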

Many teams overestimate the effectiveness of the LLMS.txt file by failing to recognize its dependency on accurate input and regular maintenance. A common error is neglecting to audit the content of these files periodically, which can lead to discrepancies between what is presented to search engines and what actually exists on the website.
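A periodic audit can start with a simple set comparison between what the file lists and what the site actually serves. The sketch below assumes you already have a set of live URLs from your own source of truth (for example a CMS export or the XML sitemap); `audit` is a hypothetical helper.

```python
import re

# Captures the URL part of Markdown links: "[Title](url)".
LINK_URL_RE = re.compile(r"\]\(([^)\s]+)\)")


def audit(llms_text: str, live_urls: set[str]) -> dict[str, list[str]]:
    """Compare URLs listed in an llms.txt-style file with URLs that exist on the site."""
    listed = set(LINK_URL_RE.findall(llms_text))
    return {
        # Listed in the file but no longer on the site: stale entries to remove.
        "stale": sorted(listed - live_urls),
        # On the site but absent from the file: candidates to add, if important.
        "missing": sorted(live_urls - listed),
    }
```

Not every live URL belongs in the file, so the "missing" list is a prompt for human review rather than an automatic fix; the "stale" list, by contrast, usually points to real discrepancies.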

Relying solely on an LLMS.txt file without proper management can hinder SEO efforts rather than support them.

LLMS.txt File Configuration Errors and Their Consequences

Configuration errors in LLMS.txt files often stem from a poor understanding of the file's structure and purpose. These mistakes can hurt website traffic, particularly for businesses that depend on accurate data representation for SEO. Common misconfigurations include incorrect file paths and syntax errors, either of which can prevent search engines from accessing critical data.

Common Configuration Mistakes That Lead to Traffic Loss

One prevalent issue is placing the LLMS.txt file in the wrong directory. If the file is not where search engines expect it, they cannot read it, and indexing opportunities are missed. Incorrect formatting, such as improper line breaks or unsupported characters, can also make part or all of the file unreadable, compounding the traffic loss.

- Incorrect file path specified in the server settings.

- Syntax errors that prevent proper parsing of the file.

- Outdated LLMS.txt files that do not reflect current data needs.
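The first two mistakes can be caught with a small validator. This sketch assumes the llmstxt.org proposal, which places the file at `/llms.txt` (a subpath is also allowed, hence the `expected_path` parameter) and treats an H1 title as the only required element; the link-format and control-character checks are additional heuristics, not part of the proposal, and both function names are hypothetical.

```python
import re
from urllib.parse import urlparse

# Link lines in the form "- [Title](url)" with optional ": notes".
LINK_RE = re.compile(r"^-\s*\[[^\]]+\]\([^)\s]+\)(?::\s*.*)?$")


def check_location(file_url: str, expected_path: str = "/llms.txt") -> list[str]:
    """Flag a file that is not served at the expected path (site root by default)."""
    path = urlparse(file_url).path
    if path != expected_path:
        return [f"file served at {path!r}, expected {expected_path!r}"]
    return []


def check_syntax(text: str) -> list[str]:
    """Return human-readable problems found in an llms.txt-style document."""
    problems: list[str] = []
    # Split on "\n" only: str.splitlines() would treat some control
    # characters as line breaks and hide them from the check below.
    lines = [raw.rstrip("\r") for raw in text.split("\n")]
    first = next((line for line in lines if line.strip()), "")
    if not first.startswith("# "):
        problems.append("first non-blank line should be an H1 title ('# Name')")
    for number, line in enumerate(lines, start=1):
        # Control characters other than tab usually point to a broken export.
        if any(ord(ch) < 32 and ch != "\t" for ch in line):
            problems.append(f"line {number}: contains control characters")
        stripped = line.strip()
        if stripped.startswith("- [") and not LINK_RE.match(stripped):
            problems.append(f"line {number}: malformed Markdown link")
    return problems
```

Running both checks in a deployment pipeline means a misplaced or malformed file is rejected before it goes live, rather than discovered later through lost traffic.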

Misconfigured LLMS.txt files can lead to significant drops in search engine visibility.

Best Practices for Configuring LLMS.txt Files Correctly

This approach fits businesses with substantial datasets that need precise organization. For these teams, a robust configuration process includes regular audits and updates of the LLMS.txt file so that it stays aligned with current site content and structure. This proactive approach helps maintain clear communication with search engines.
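One way to make audits routine is a scheduled check that flags the file for review. In this sketch, `needs_review` and the 30-day default are assumptions; the date of the latest content change would come from your CMS or deployment log.

```python
from datetime import date, timedelta


def needs_review(file_updated: date, latest_content_change: date, today: date,
                 max_age_days: int = 30) -> list[str]:
    """Return reasons the llms.txt file should be reviewed (empty list = none).

    `needs_review` and the 30-day default are hypothetical choices.
    """
    reasons = []
    # The site changed after the file was last touched: it may now be out of date.
    if latest_content_change > file_updated:
        reasons.append("site content changed after the file was last updated")
    # Even without known changes, force a periodic review.
    if today - file_updated > timedelta(days=max_age_days):
        reasons.append(f"file not reviewed in more than {max_age_days} days")
    return reasons
```

Returning reasons instead of a bare boolean makes the alert actionable: the reviewer knows whether to compare against recent content changes or simply perform the scheduled check.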

However, a crucial limitation is the time investment required for ongoing management. Many teams underestimate how often these files need to be reviewed and adjusted based on changes in website structure or content strategies.

Tools and Resources for Verifying File Integrity

A practical workflow example involves using automated tools to monitor LLMS.txt file integrity. For instance, a mid-sized tech firm could implement scripts that regularly check for syntax errors or outdated paths in their LLMS.txt files. This ensures any issues are flagged before they affect site performance.
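A minimal integrity monitor, assuming the check runs on a schedule (for example a daily cron job): it stores a SHA-256 hash of the file and reports whether the content changed since the last run, so that unexpected edits can be reviewed. `check_integrity` and the JSON state file are hypothetical choices.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def check_integrity(current_text: str, state_file: Path) -> str:
    """Compare the file's SHA-256 hash with the one stored on the previous run.

    Returns "baseline" on the first run, "unchanged" if the content is
    identical, or "changed" (after storing the new hash) so that a person
    can confirm the edit was intentional.
    """
    digest = hashlib.sha256(current_text.encode("utf-8")).hexdigest()
    if not state_file.exists():
        state_file.write_text(json.dumps({"sha256": digest}))
        return "baseline"
    previous = json.loads(state_file.read_text())["sha256"]
    if previous == digest:
        return "unchanged"
    state_file.write_text(json.dumps({"sha256": digest}))
    return "changed"


if __name__ == "__main__":
    # Demo with a throwaway state file; a real job would keep it between runs.
    with tempfile.TemporaryDirectory() as tmp:
        state = Path(tmp) / "llms_state.json"
        print(check_integrity("# Example\n", state))     # baseline
        print(check_integrity("# Example\n", state))     # unchanged
        print(check_integrity("# Example v2\n", state))  # changed
```

A hash only says that something changed, not whether the change is correct, so a "changed" result is best paired with a syntax or link check before anyone signs off.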

A common mistake teams make is assuming that once an LLMS.txt file is set up correctly, it will remain effective indefinitely. In reality, without continuous oversight and adjustments based on evolving SEO practices and website changes, these files can quickly become ineffective.

Impact of LLMS.txt Files on Crawl Budget Allocation

On large websites with extensive data, the LLMS.txt file is often used to signal which content matters most, with the aim of influencing how search engine crawlers allocate their crawl budget. It is best suited for organizations that want precise control over which pages or datasets are prioritized, so that high-value content receives the attention it deserves.

A significant limitation arises from the misconception that simply including an LLMS.txt file will automatically optimize crawl budget allocation. In practice, without a strategic approach to its configuration and management, teams may inadvertently waste crawl budget on low-priority pages or outdated information, leading to missed indexing opportunities for crucial content.

Workflow Example: Managing Crawl Budget with LLMS.txt Files

For instance, a large publishing organization may use an LLMS.txt file to specify which articles should be crawled first, based on their relevance and traffic potential. By regularly updating the file to add new publications and remove outdated entries, it can help guide search engines toward high-impact content while reducing unnecessary crawling of less relevant pages.
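The prioritization step might be sketched as follows, assuming each article carries a publication date and a traffic figure from your analytics export. The sketch only controls which articles the file lists and in what order; whether a given crawler follows that order is not something the file itself can guarantee. `Article`, `priority_section`, and the one-year cutoff are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Article:
    # Hypothetical record; monthly_visits would come from an analytics export.
    title: str
    url: str
    published: date
    monthly_visits: int


def priority_section(articles: list[Article], today: date,
                     max_age_days: int = 365, limit: int = 50) -> str:
    """Render an H2 section listing recent articles, highest traffic first."""
    cutoff = today - timedelta(days=max_age_days)
    # Drop outdated entries, then rank by traffic (ties broken by title).
    fresh = [a for a in articles if a.published >= cutoff]
    fresh.sort(key=lambda a: (-a.monthly_visits, a.title))
    lines = ["## Priority articles", ""]
    lines += [f"- [{a.title}]({a.url})" for a in fresh[:limit]]
    return "\n".join(lines) + "\n"
```

Capping the list with `limit` keeps the section focused on high-impact content instead of growing with every new publication.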

Many teams misjudge the effectiveness of the LLMS.txt file by assuming it serves as a set-it-and-forget-it tool. This leads to neglect in reviewing the contents and structure of the file, resulting in outdated priorities that do not align with current SEO strategies or business goals.

Mismanagement of LLMS.txt files can lead to significant inefficiencies in how search engines allocate crawl budgets.

Analyzing Traffic Fluctuations Linked to LLMS.txt Files

Traffic fluctuations often coincide with updates to LLMS.txt files, particularly for businesses that rely heavily on data-driven SEO strategies, such as medium and large enterprises that manage substantial amounts of content and data. A change to an LLMS.txt file can either improve or disrupt the flow of information to search engines, which in turn can affect website traffic.

The teams best placed to analyze traffic changes related to LLMS.txt files are those with a dedicated analytics function capable of interpreting complex datasets. They can use insights from traffic patterns to make informed decisions about their LLMS.txt configurations.

However, a notable limitation arises when organizations fail to establish clear metrics for assessing the impact of LLMS.txt file modifications. Without defined KPIs, teams may struggle to draw meaningful conclusions from their analytics data, leading to misguided adjustments that do not address underlying issues.

Methods for Tracking Traffic Changes Related to File Modifications

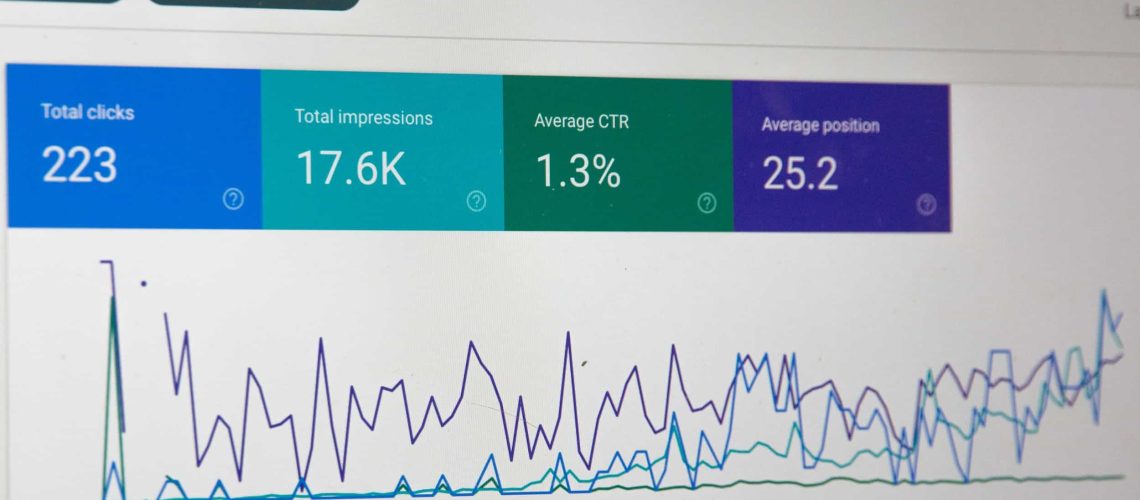

To effectively track traffic changes associated with LLMS.txt file modifications, businesses should implement comprehensive analytics tools such as Google Analytics or specialized SEO software that tracks site performance metrics over time. For example, a media outlet could set up specific goals in their analytics dashboard to monitor user engagement and page views before and after making updates to their LLMS.txt file.

- Monitor organic search traffic trends following LLMS.txt updates.

- Analyze bounce rates and session durations for affected pages.

- Use heatmaps to assess user engagement on key content areas.
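To put numbers on the first item, a before/after comparison of average daily organic sessions is a reasonable starting point. This sketch assumes the daily figures have been exported from your analytics tool into a mapping from date to session count; `before_after_change` and the 14-day window are illustrative choices.

```python
from datetime import date, timedelta
from statistics import mean


def before_after_change(daily_sessions: dict[date, int], update_day: date,
                        window_days: int = 14) -> float:
    """Percentage change in mean daily sessions after vs. before an update.

    "Before" covers the window_days days preceding update_day; "after"
    covers update_day itself and the following days. Missing days are skipped.
    """
    before_days = [update_day - timedelta(days=i) for i in range(1, window_days + 1)]
    after_days = [update_day + timedelta(days=i) for i in range(window_days)]
    before = [daily_sessions[d] for d in before_days if d in daily_sessions]
    after = [daily_sessions[d] for d in after_days if d in daily_sessions]
    if not before or not after:
        raise ValueError("need data on both sides of the update")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline has no sessions; percentage change undefined")
    return (mean(after) - baseline) / baseline * 100
```

Equal-length windows on both sides keep day-of-week effects roughly balanced; the same function can be applied to per-page data to compare affected and unaffected pages.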

Failing to track the right metrics can lead teams into a cycle of ineffective adjustments.

Interpreting Analytics Data in the Context of LLMS.txt File Updates

Interpreting analytics data requires a nuanced approach: the goal is not just to observe fluctuations but to understand their causes. Many teams wrongly assume that any dip in traffic after an LLMS.txt update is caused solely by the change itself. In reality, other factors, such as algorithm updates or seasonal trends, may also play significant roles.

For instance, a travel agency that updates its LLMS.txt file might see traffic drop during the off-peak season for reasons unrelated to the file. Misreading such a drop can lead teams to revert changes prematurely, without understanding their actual effect.
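A simple way to separate seasonal effects is to compare the change after the update with the change over the same calendar windows a year earlier. The sketch below subtracts the two percentage changes; it is a rough year-over-year check that does not account for algorithm updates, and `seasonally_adjusted_change` is a hypothetical name.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from before to after."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100


def seasonally_adjusted_change(this_before: float, this_after: float,
                               last_before: float, last_after: float) -> float:
    """Percentage-point gap between this year's change and last year's change
    over the same calendar windows (a rough year-over-year check)."""
    return pct_change(this_before, this_after) - pct_change(last_before, last_after)


if __name__ == "__main__":
    # Hypothetical figures: 1000 -> 800 sessions this year, 1000 -> 820 last year.
    print(round(seasonally_adjusted_change(1000, 800, 1000, 820), 1))  # -2.0
```

In the example, traffic fell 20% this year but 18% over the same dates last year, so only about 2 percentage points of the drop are left to explain by the LLMS.txt update or anything else.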

Long-term Effects of Ongoing Misconceptions on Traffic Patterns

Ongoing misconceptions regarding the role of LLMS.txt files can create long-term adverse effects on traffic patterns. Teams may develop an overreliance on these files as a catch-all solution without recognizing the need for continuous optimization and context-sensitive updates.

This misalignment can lead companies into cycles of trial and error where they repeatedly alter their configurations without achieving desired results. For example, an online retailer might frequently change its LLMS.txt file based on temporary spikes or drops in traffic rather than focusing on broader SEO strategies aligned with customer behavior.
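One guard against reacting to temporary spikes is to change the file only after a shift has persisted. The sketch below compares a 7-day rolling average with a baseline and requires the deviation to last 14 consecutive days; all thresholds and the `sustained_shift` name are assumptions to tune for your own traffic.

```python
def sustained_shift(daily: list[float], baseline: float, threshold_pct: float = 15.0,
                    window: int = 7, min_days: int = 14) -> bool:
    """Return True only if the trailing `window`-day average has deviated from
    `baseline` by more than `threshold_pct` percent for `min_days` consecutive days."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    streak = 0
    for i in range(window - 1, len(daily)):
        # Trailing rolling mean over the last `window` days ending at day i.
        rolling = sum(daily[i - window + 1:i + 1]) / window
        deviation = abs(rolling - baseline) / baseline * 100
        streak = streak + 1 if deviation > threshold_pct else 0
        if streak >= min_days:
            return True
    return False
```

With these defaults, a single-day spike lifts the rolling average for at most seven days, so it can never trigger a change on its own, while a drop that holds for weeks does.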

Misconceptions about the efficacy of LLMS.txt files can result in wasted resources and missed opportunities for growth.

Future Trends in File Management: The Evolution of LLMS.txt Files

The evolution of LLMS.txt files is increasingly influenced by emerging technologies such as artificial intelligence and machine learning. Businesses that adopt these technologies may be able to use LLMS.txt files for more sophisticated data management, including real-time updates and richer interactions with search engine algorithms. This approach is particularly useful for medium to large enterprises that need agility in their SEO strategies.

However, integrating new technologies with existing LLMS.txt workflows is complex. Many teams underestimate the technical expertise required to implement these systems effectively, which can lead to operational inefficiencies and miscommunication with search engines.

Predictions for the Role of LLMS.txt in Future SEO Strategies

As search engines evolve, LLMS.txt files are likely to become more dynamic and responsive to user behavior. For instance, businesses may use machine learning to analyze traffic patterns and automatically adjust their LLMS.txt configurations based on real-time data. This proactive approach could help organizations stay ahead of trends and optimize their SEO efforts continuously.

A common misconception is that once an LLMS.txt file is established, it becomes a static element within an SEO strategy. In reality, successful teams recognize that ongoing adjustments are essential to maintain alignment with changing search engine algorithms and user expectations.

Adapting to Changes in Search Engine Policies Regarding File Management

The landscape of file management is also shaped by evolving search engine policies, which may impose new requirements on how data is structured and shared through LLMS.txt files. Organizations must stay alert to these changes to remain compliant and avoid penalties that could affect traffic.

For example, a large retail company might need to adapt its LLMS.txt file structure following a policy update from Google regarding structured data requirements. If they fail to update their configurations promptly, they risk losing visibility in search results.

Staying informed about evolving policies is crucial for maintaining effective SEO strategies involving LLMS.txt files.